Essay by Eric Worrall

“… Google adds machine learning to climate models for ‘faster forecasts’ …”

The secret to better weather forecasting may lie in AI

Google adds machine learning to climate models for ‘faster forecasts’

Tobias Mann

Sat 27 Jul 2024 // 13:27 UTC…

In a paper published in the journal Nature this week, a team from Google and the European Center for Medium-Range Weather Forecasts (ECMWF) describe a novel approach that uses machine learning to overcome limitations in existing climate models and to produce forecasts faster and more accurately than existing methods.

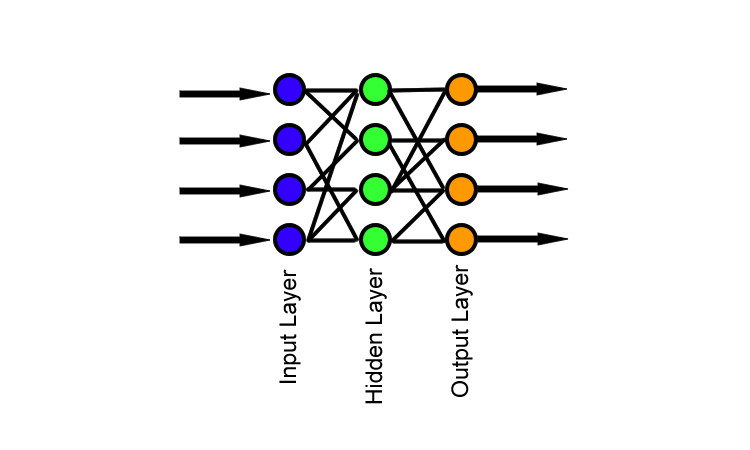

Named NeuralGCM, the model was developed using historical weather data collected by ECMWF, and uses neural networks to augment more traditional HPC-style physics simulations.

As Stephan Hoyer, one of the crew behind NeuralGCM, wrote in a new report, most current climate models make predictions by dividing the globe into cubes 50-100 kilometers on each side and then simulating how the air and moisture inside them move, based on the known laws of physics.

NeuralGCM works in a similar way, but with added machine learning used to track climate processes that are poorly understood or that occur on smaller scales.
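To make the hybrid idea concrete, here is a minimal toy sketch in Python. It is not NeuralGCM's code. It assumes a one-dimensional periodic "globe" in which a known-physics step (upwind advection) misses a small sub-grid sink, and a single learned weight, fitted by least squares to the residual between "reality" and the physics step, supplies the missing term. All names (`physics_step`, `true_step`, `hybrid_step`, `SINK`) and grid sizes are hypothetical; NeuralGCM pairs a differentiable solver for atmospheric dynamics with a neural network, which this does not attempt to reproduce.

```python
import math
import random

N = 32        # grid cells on a 1-D periodic "globe"
DX = 1.0 / N  # cell width
DT = 0.01     # time step
U = 1.0       # wind speed known to the physics solver
SINK = 0.5    # hypothetical sub-grid decay rate the solver does not model


def physics_step(q):
    """Known physics: first-order upwind advection of tracer q by wind U."""
    c = U * DT / DX  # Courant number (0.32 here, so the scheme is stable)
    # q[i - 1] with i == 0 wraps to q[-1], giving periodic boundaries.
    return [q[i] - c * (q[i] - q[i - 1]) for i in range(len(q))]


def true_step(q):
    """Stand-in for reality: the same advection plus the unresolved sink."""
    return [a - SINK * DT * qi for a, qi in zip(physics_step(q), q)]


def fit_correction(samples):
    """Least-squares fit of one weight w so that w * q approximates the
    residual true_step(q) - physics_step(q) in every cell."""
    num = den = 0.0
    for q in samples:
        for qi, t, p in zip(q, true_step(q), physics_step(q)):
            num += (t - p) * qi
            den += qi * qi
    return num / den


def hybrid_step(q, w):
    """Hybrid model: physics step plus the learned correction term."""
    return [p + w * qi for p, qi in zip(physics_step(q), q)]


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


random.seed(0)
training = [[random.uniform(-1, 1) for _ in range(N)] for _ in range(50)]
w = fit_correction(training)

# Run truth, physics-only and hybrid forward from the same initial state.
q0 = [math.sin(2 * math.pi * i / N) for i in range(N)]
truth, physics, hybrid = q0, q0, q0
for _ in range(100):
    truth = true_step(truth)
    physics = physics_step(physics)
    hybrid = hybrid_step(hybrid, w)

print(f"learned w = {w:.6f}")
print(f"physics-only RMSE = {rmse(physics, truth):.4f}")
print(f"hybrid RMSE       = {rmse(hybrid, truth):.2e}")
```

In this toy the missing process is a single linear coefficient, so least squares recovers it almost exactly; real sub-grid processes such as cloud formation are nonlinear and far harder to learn.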

…

Read more: https://www.theregister.com/2024/07/27/google_ai_weather/

Abstract of the study:

A neural general circulation model for weather and climate

Dmitrii Kochkov, Janni Yuval, Ian Langmore, Peter Norgaard, Jamie Smith, Griffin Mooers, Milan Klöwer, James Lottes, Stephan Rasp, Peter Düben, Sam Hatfield, Peter Battaglia, Alvaro Sanchez-Gonzalez, Matthew Willson, Michael P. Brenner & Stephan Hoyer

Abstract

General circulation models (GCMs) are the basis of weather and climate prediction [1,2]. A GCM is a physics-based simulator that combines a numerical solver for large-scale dynamics with a tuned representation for small-scale processes such as cloud formation. Recently, machine learning models trained on reanalysis data have achieved comparable or better skill than GCMs for deterministic weather forecasting [3,4]. However, these models have not shown better ensemble forecasts, or shown sufficient stability for long-term weather and climate simulations. Here we present a GCM that combines a differentiable solver for atmospheric dynamics with a machine learning component and show that it can produce deterministic weather, ensemble weather and climate forecasts that match the best machine learning and physics-based methods. NeuralGCM competes with machine learning models for forecasts of one to ten days, and with the European Centre for Medium-Range Weather Forecasts ensemble prediction for forecasts of one to fifteen days. With prescribed sea surface temperatures, NeuralGCM can accurately track climate metrics over decades, and climate forecasts at 140 kilometer resolution show emergent phenomena such as realistic frequencies and trajectories of tropical cyclones. For both weather and climate, our approach offers orders of magnitude computational savings over conventional GCMs, although our model does not extrapolate to substantially different future climates. Our results show that end-to-end deep learning is compatible with the tasks performed by conventional GCMs and can improve the large-scale physical simulations that are important for understanding and predicting the Earth system.

Read more: https://www.nature.com/articles/s41586-024-07744-y

From my reading of the main study, the authors seem to claim that adding the magic sauce of neural nets produces better short-term weather forecasts and better climate predictions.

The researchers tested the model by holding back some of the data from training, then used the trained neural network to make weather forecasts from real-world data the AI had never seen. The paper also discusses how the model’s predictions diverge from reality after 3 days: “At longer times, the RMSE increases rapidly due to the differences in chaotic weather trajectories, making the RMSE less informative for a deterministic model”. The authors nonetheless say the approach still beats the traditional approach once this chaotic divergence is taken into account.
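The effect described in that quote, forecast error growing rapidly once chaotic trajectories separate, can be seen in a toy system. This sketch swaps in the classic Lorenz-63 equations (not the atmosphere, and not NeuralGCM's evaluation) and runs a "truth" and a "forecast" that start a millionth apart. The RMSE between them grows roughly exponentially and then saturates at the size of the attractor, after which a single deterministic run says little about where the truth actually is. The step size, perturbation and sampling interval are arbitrary assumptions.

```python
import math

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0  # classic Lorenz-63 parameters
DT = 0.01                                 # integration step (assumed)


def tendency(s):
    """Lorenz-63 right-hand side for state s = (x, y, z)."""
    x, y, z = s
    return (SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z)


def rk4_step(s):
    """One fourth-order Runge-Kutta step of size DT."""
    def add(a, b, h):
        return tuple(ai + h * bi for ai, bi in zip(a, b))

    k1 = tendency(s)
    k2 = tendency(add(s, k1, DT / 2))
    k3 = tendency(add(s, k2, DT / 2))
    k4 = tendency(add(s, k3, DT))
    return tuple(si + DT / 6 * (a + 2 * b + 2 * c + d)
                 for si, a, b, c, d in zip(s, k1, k2, k3, k4))


def rmse(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a))


# Spin up onto the attractor before starting the twin experiment.
state = (1.0, 1.0, 1.0)
for _ in range(1000):
    state = rk4_step(state)

truth = state
forecast = (state[0] + 1e-6, state[1], state[2])  # tiny initial-condition error
errors = []
for step in range(1, 3001):
    truth = rk4_step(truth)
    forecast = rk4_step(forecast)
    if step % 250 == 0:
        errors.append(rmse(truth, forecast))
        print(f"t = {step * DT:5.1f}  RMSE = {errors[-1]:.2e}")
```

Once the error has saturated, the deterministic forecast is no better than a random state on the attractor, which is why ensemble forecasts, rather than single runs, are used to judge skill at longer lead times.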

I’m a bit wary of trusting the predictive skill of neural net black boxes. History is filled with scientists who followed all the steps described by the authors, only to see their neural network diverge wildly from the expected behavior on the day of the demonstration. I would prefer that they put more effort into reverse engineering the neural nets, to tease out what, if anything, they have found, and to see whether they have discovered new atmospheric physics that could be used to create better deterministic white box models.
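One simple, widely used way to start probing a black box is permutation feature importance: shuffle one input across samples and measure how much the model’s error rises. This is an illustrative sketch, not something the NeuralGCM authors report; `black_box` is a hypothetical stand-in for a trained network, chosen so we know in advance that it leans on `x0`, barely uses `x1`, and ignores `x2`.

```python
import random


def black_box(x):
    """Hypothetical stand-in for a trained network: uses x0 strongly,
    x1 weakly, and ignores x2 entirely."""
    return 3.0 * x[0] + 0.5 * x[1]


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def permutation_importance(model, xs, ys, rng):
    """Increase in mean squared error when each input column is shuffled."""
    base = mse(model, xs, ys)
    scores = []
    for j in range(len(xs[0])):
        column = [x[j] for x in xs]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [x[:j] + [v] + x[j + 1:] for x, v in zip(xs, column)]
        scores.append(mse(model, shuffled, ys) - base)
    return scores


rng = random.Random(42)
xs = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(2000)]
ys = [black_box(x) + rng.gauss(0, 0.1) for x in xs]  # noisy "observations"
scores = permutation_importance(black_box, xs, ys, rng)
for name, s in zip(["x0", "x1", "x2"], scores):
    print(f"{name}: {s:.3f}")
```

Permutation importance only reveals which inputs matter, not what physics the network has learned, so it is a first step toward the kind of reverse engineering argued for above rather than a substitute for it.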

In 2018, Amazon suffered a major embarrassment when one of its neural networks went wrong. The company tried using neural nets to screen tech job candidates, but found the neural net showed a bias against female candidates. The neural network noticed that most of the candidates were male, and concluded that it should exclude applications from female candidates based on their gender.

Anyone who thinks there is a basis for Amazon’s neural net gender bias should visit a tech store in Asia. Somehow in the West we convince girls from a young age that they are not suited to technology jobs. The few women who made it through the filter of Western culture, women like Margaret Hamilton, who led the team that programmed the Apollo guidance computer, have more than demonstrated their capabilities. Hamilton is credited with coining the term “software engineering”.

Neural nets are very useful; they can identify subtle relationships. But the ability to see obscure patterns in data carries the risk of false positives: seeing patterns that don’t exist. Especially when analyzing physical phenomena, if you don’t try to reverse engineer the workings of the magic black box neural network when it has demonstrated superior skill, you simply don’t know whether what you are seeing is genuine skill or a subtle false positive.
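The false-positive risk can be illustrated without a neural network at all. In this sketch (pure noise, hypothetical sizes), 1,000 random candidate predictors are screened against a random target over a 30-sample "training" period. The best one looks impressively correlated in-sample, but because the relationship is pure chance it vanishes on the 30 held-out samples. A flexible model that searches a huge space of patterns faces the same multiple-comparisons trap, which is why out-of-sample testing matters, though passing such a test does not by itself explain what the model has learned.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


rng = random.Random(1)
N_TRAIN, N_TEST, N_CANDIDATES = 30, 30, 1000
n = N_TRAIN + N_TEST
target = [rng.gauss(0, 1) for _ in range(n)]
# Every candidate predictor is pure noise, unrelated to the target.
candidates = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(N_CANDIDATES)]

# "Discover" the candidate that best explains the training period.
best = max(candidates,
           key=lambda c: abs(pearson(c[:N_TRAIN], target[:N_TRAIN])))
r_train = abs(pearson(best[:N_TRAIN], target[:N_TRAIN]))
r_test = abs(pearson(best[N_TRAIN:], target[N_TRAIN:]))
print(f"in-sample |r| = {r_train:.2f}, out-of-sample |r| = {r_test:.2f}")
```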
